Creating consumer value with smart Wi-Fi

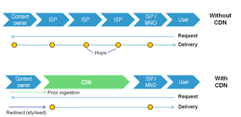

Wi-Fi is central to the value proposition of home connectivity, but it can also hamper an otherwise good broadband experience. Smart Wi-Fi services can address consumer pain points and build new value by enabling a suite of advanced services and establishing a stronger telco presence in the connected home.