Future Skills Tracker report

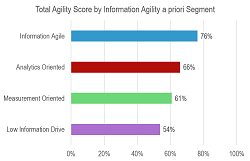

Telcos need new skills to transform their organisations and compete like technology companies. Insights from our Future Skills Tracker tool show that telcos lag behind techcos in the penetration of key skillsets. They also point to the capabilities telcos need to become more innovative, agile and software-driven overall.